Imagine watching a Britney Spears concert on TV and thinking, “I want to pause this and have Britney do something else.” In this article, we’ll explore how image-to-video AI technology lets us do just that.

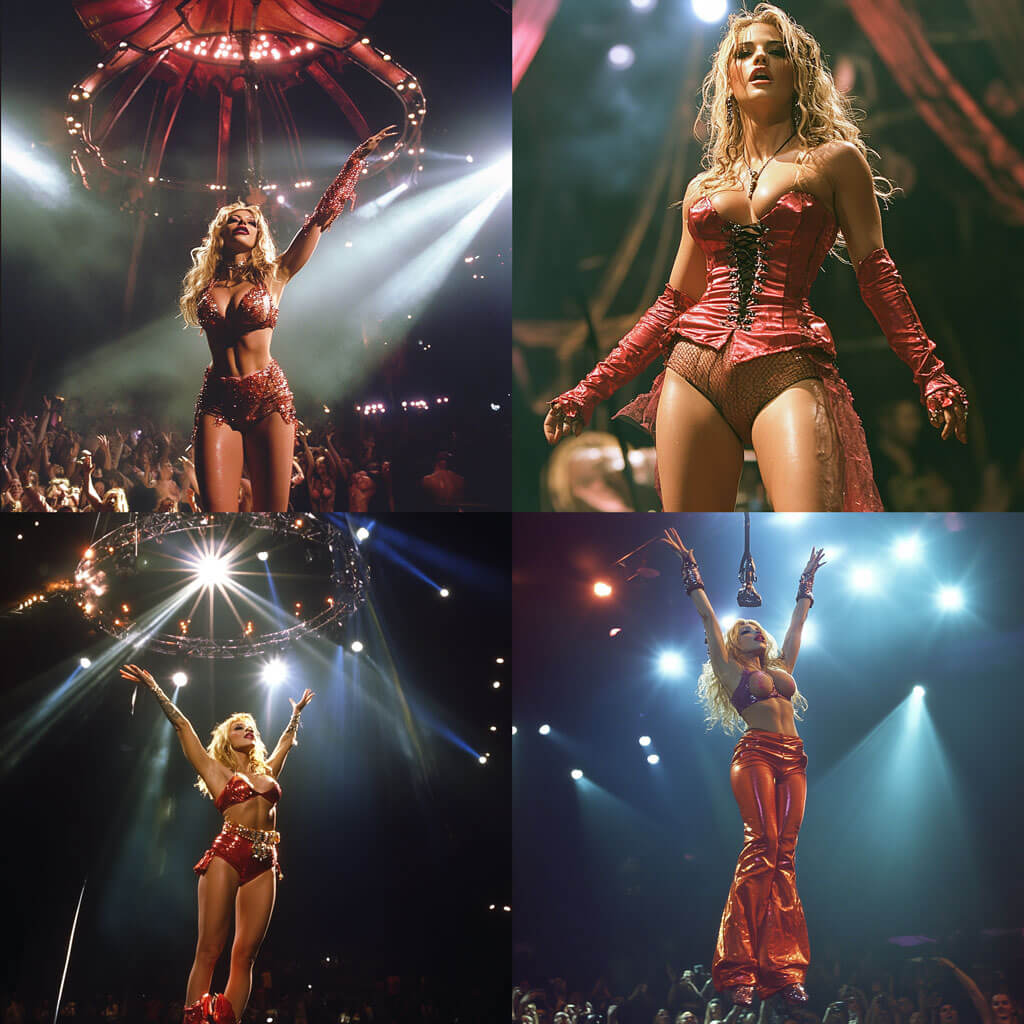

First, We’ll Need An Image

We began by using Midjourney, a text-to-image generator, to create an image based on the phrase “Britney Spears performing on stage during the Circus tour.”

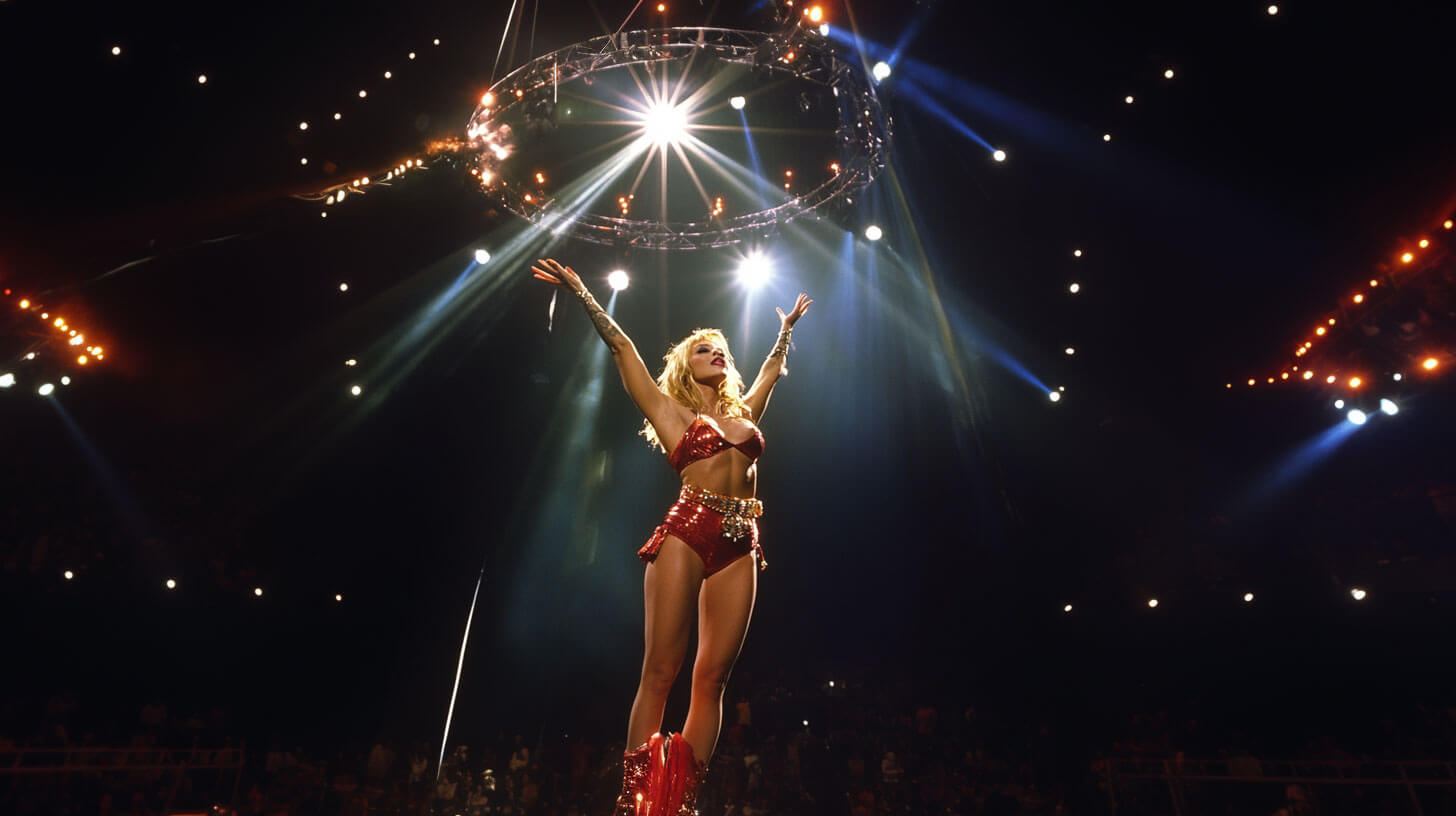

From the four images generated by Midjourney, we selected one, widened it, and upscaled it.

We want a high-resolution image (up to 20 MB) so that Hailuo’s image-to-video AI has sufficient pictorial information to work with.
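As a quick sanity check before uploading, a short Python sketch like this one can confirm that the upscaled file stays under that size. The 20 MB figure comes from this article; reading it as 20 million bytes, and the function name `fits_upload_limit`, are our own assumptions for illustration.

```python
import os

# Assumption: "20 MB" is read as 20 million bytes; the real limit may be defined differently.
MAX_UPLOAD_BYTES = 20 * 1000 * 1000


def fits_upload_limit(path: str, limit_bytes: int = MAX_UPLOAD_BYTES) -> bool:
    """Return True if the file at `path` is no larger than `limit_bytes`."""
    return os.path.getsize(path) <= limit_bytes


# Example use (the file name is a placeholder):
# if not fits_upload_limit("britney_upscaled.png"):
#     print("Image is too large; export it again at a lower quality or resolution.")
```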

Next, We’ll Make The Video

Within the Poe app, we’ll use Hailuo AI’s image-to-video generator and give it our starting image.

In addition to uploading our image, we’ll also input a simple prompt to Hailuo:

Prompt: “Britney Spears performing on stage during the Circus tour.”

With the combination of image and text prompt, Hailuo delivers a 4-second video clip after about 600 seconds (10 minutes) of computation.
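Those two numbers make it easy to budget time for a longer project. The sketch below is a rough estimate only: it assumes every clip takes about as long as ours did and that clips are generated one after another, neither of which is guaranteed.

```python
CLIP_SECONDS = 4        # clip length reported in this article
RENDER_SECONDS = 600    # approximate generation time reported in this article


def estimate(num_clips: int) -> tuple[int, float]:
    """Return (seconds of footage, minutes of generation) for `num_clips` clips."""
    footage = num_clips * CLIP_SECONDS
    render_minutes = num_clips * RENDER_SECONDS / 60
    return footage, render_minutes


print(estimate(1))  # (4, 10.0)
print(estimate(5))  # (20, 50.0)
```

In other words, under these assumptions a five-clip sequence yields about 20 seconds of footage for roughly 50 minutes of waiting.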

Key Takeaway:

The initial clip demonstrates how image-to-video AI can animate a static image based on a straightforward prompt, resulting in a short video that brings the image to life.

Exploring Different Prompts: Image Prompt 1A

Using the same starting image, we adjusted our prompt to guide the AI in creating a different video. This time, the prompt was:

Prompt: “A dramatic zoom-out shot featuring Britney Spears in Las Vegas. Britney Spears does an enthusiastic and fun dance in her unique costume.”

The result was a new 4-second video clip where the camera action and Britney’s movements changed according to the prompt.

Key Takeaway:

By altering the prompt while keeping the same starting image, the image-to-video AI generates a different video, showcasing the impact of detailed prompts on the final output.

Creating a Sequence: Image Prompt 1B

To create a longer sequence, we generated multiple video clips and stitched them together using a video editor.

- First Clip: We started with our initial image and a prompt directing Britney to take off on a rope and skydive around the arena.

Prompt: “Britney Spears takes off on a rope and skydives around the arena.”

After the video was generated, we took the last frame of this clip to use as the starting image for the next clip. (Video-editing software can help with this task.)

- Second Clip: Using the final frame of the first clip, we prompted the AI for Britney to hang out with fans and crowd surf.

Prompt: “Britney Spears hangs out with fans and does a crowd surf.”

Again, we used the last frame for the next prompt.

- Third Clip: Continuing the process, we directed Britney to do a fun dance, and have the camera move as in a drone shot.

Prompt: “Britney Spears performs a fun dance captured by a drone shot.”

By repeating this method, we created a series of connected clips that formed a somewhat-cohesive video sequence.
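If you would rather script the last-frame step than do it in a video editor, one option is the ffmpeg command-line tool. This is not how we did it for this article; it is a minimal sketch that assumes ffmpeg is installed and on your PATH, and the file names are placeholders.

```python
import subprocess


def last_frame_command(clip_path: str, image_path: str) -> list[str]:
    """Build an ffmpeg command that saves the final frame of `clip_path`."""
    return [
        "ffmpeg",
        "-y",                 # overwrite the output image if it already exists
        "-sseof", "-1",       # start reading one second before the end of the input
        "-i", clip_path,
        "-update", "1",       # keep rewriting a single image, so the last frame is what remains
        image_path,
    ]


def extract_last_frame(clip_path: str, image_path: str) -> None:
    """Run ffmpeg; raises subprocess.CalledProcessError if ffmpeg reports a failure."""
    subprocess.run(last_frame_command(clip_path, image_path), check=True)


# Example use (placeholder file names):
# extract_last_frame("clip1.mp4", "clip1_last.png")
```

The command reads only the final second of the clip and overwrites the same image for every decoded frame, so the file left on disk is the last frame.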

Key Takeaway:

By using the last frame of each video as the starting image for the next prompt, we can create extended video sequences with image-to-video AI, allowing for more complex storytelling.
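The chaining logic itself is simple enough to write down. In the sketch below, `generate_clip` and `last_frame` are hypothetical stand-ins that you would supply yourself: the first represents whatever you do to get a clip out of the video generator (we did this by hand in the Poe app), and the second represents the frame-export step.

```python
from typing import Callable

# Hypothetical signature: (start_image_path, prompt) -> path of the generated clip.
GenerateClip = Callable[[str, str], str]
# Hypothetical signature: (clip_path) -> path of an image holding that clip's last frame.
LastFrame = Callable[[str], str]


def chain_clips(first_image: str, prompts: list[str],
                generate_clip: GenerateClip, last_frame: LastFrame) -> list[str]:
    """Generate one clip per prompt, seeding each clip with the previous clip's last frame."""
    clips = []
    start_image = first_image
    for index, prompt in enumerate(prompts):
        clip = generate_clip(start_image, prompt)
        clips.append(clip)
        if index < len(prompts) - 1:
            # The last frame of this clip becomes the starting image for the next prompt.
            start_image = last_frame(clip)
    return clips
```

Passing the two steps in as functions keeps the loop independent of any particular tool, so the same structure works whether the steps are manual, scripted, or a mix.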

Building a Longer Concept Video: Image Prompt 1C

Taking this approach further, we built our final concept video from five clips.

Each clip was generated using Hailuo AI with specific prompts directing the action, camera movements, and transitions.

The Process Involved:

- Clip 1: Start with the initial image and the prompt:

Prompt: “Britney Spears rides a rope and skydives around the arena.”

- Clip 2: Use the last frame of Clip 1 as the new starting image.

Prompt: “Britney jumps into the fans and enjoys a fun crowd-surfing experience.”

- Clip 3: Use the last frame of Clip 2 as the new starting image.

Prompt: “Britney performs an energetic dance as a drone captures the moment.”

- Clip 4: Use the last frame of Clip 3 as the new starting image.

Prompt: “Britney goes to the edge of the stage and waves to the crowd.”

- Clip 5: Conclude the sequence with the last frame of Clip 4 as the starting image.

Prompt: “Britney brings an excited fan on stage for a special performance.”

After generating each clip, we compiled them using a video editor to create a seamless concept video.
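We did the compiling in a video editor, but the same join can be scripted. The sketch below uses ffmpeg’s concat demuxer and assumes ffmpeg is installed, that all clips share the same codec and resolution (so they can be joined without re-encoding), and that no file path contains a single quote. The file names are placeholders.

```python
import subprocess
from pathlib import Path


def write_concat_list(clips: list[str], list_path: str) -> str:
    """Write the text file the concat demuxer reads: one "file '<path>'" line per clip."""
    lines = [f"file '{Path(clip).resolve().as_posix()}'" for clip in clips]
    text = "\n".join(lines) + "\n"
    Path(list_path).write_text(text, encoding="utf-8")
    return text


def concat_command(list_path: str, output_path: str) -> list[str]:
    """Build an ffmpeg command that joins the listed clips without re-encoding."""
    return [
        "ffmpeg", "-y",
        "-f", "concat",   # read the input as a list of files to join
        "-safe", "0",     # allow the absolute paths written above
        "-i", list_path,
        "-c", "copy",     # copy the streams as they are instead of re-encoding
        output_path,
    ]


# Example use (placeholder file names):
# write_concat_list(["clip1.mp4", "clip2.mp4", "clip3.mp4"], "clips.txt")
# subprocess.run(concat_command("clips.txt", "concept_video.mp4"), check=True)
```

If the clips do not match in format, stream copying can fail or glitch; in that case a video editor, or re-encoding instead of `-c copy`, is the safer route.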

Key Takeaway:

Combining multiple clips generated by image-to-video AI makes more elaborate videos possible, simulating the process of directing and editing a traditional video shoot.

How to Achieve This with Image to Video AI

By following these steps, you can create your own dynamic videos using image-to-video AI technology:

- Choose a Starting Image: Upload a real photo, or use a text-to-image generator such as Midjourney or Flux to create your initial image.

- Input into Hailuo AI: Use the starting image in Hailuo AI within Poe.

- Craft Your Prompt: Write a detailed prompt that guides the AI on what happens in the finished video.

- Generate the Video: Allow the AI to process and create the video clip.

- Iterate for Sequences: For longer videos, use the last frame of each generated clip as the starting image for the next prompt.

- Compile the Clips: Use a video editor to stitch the clips together into a cohesive sequence.

Conclusion

The advancements in image-to-video AI technology, as demonstrated by Hailuo AI within Poe, open up new possibilities for content creation.

By starting with a simple image and using detailed prompts, we can generate dynamic videos that bring our visions to life.

Whether you’re a filmmaker, an enthusiast, or any other kind of storyteller, experimenting with image-to-video AI tools like these lets you explore creative ideas quickly and efficiently.

As the technology continues to evolve, we can anticipate even more sophisticated and user-friendly applications in the near future.

Excellent though conveniently concise doc.

It helped me a great deal.

I would recommend Shotcut to export frames and compose videos.

WebP format worked best for me.

Question: is there a site where you can look up which parameters a given bot on Poe accepts?

Thank you, and that’s a great tip about Shotcut.

Regarding the parameter control available by the image / video generation bots, I’ve noticed that only *some* of Poe’s bots actually list their parameter controls.

In Poe, within the bot, there will be a short description with a clickable ‘view more’. Clicking that will generally list its parameters (if offered).

Here’s Pika 1.5 (an image-to-video AI bot on Poe); its parameters were listed after I clicked the ‘more info’ button:

“Additional parameters:

--pikaffect (squish, explode, crush, inflate, cake-ify, melt)

--aspect (e.g. 16:9, 19:13)

--framerate (between 8-24)

--motion (motion intensity, between 0-4)

--gs (guidance scale/relevance to text, between 8-24)

--no (negative prompt, e.g. ugly, scary)

--seed (e.g. 12345)

Camera control (use one at a time):

--zoom (“in” or “out”)

--pan (“left” or “right”)

--tilt (“up” or “down”)

--rotate (“cw” or “ccw”)”
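If you build prompts like this often, a small helper can catch out-of-range values before you send them. The sketch below uses only the ranges from the listing above. Two things are my assumptions rather than anything the bot page confirms: that each parameter is written with a double-hyphen prefix followed by a space and its value, and that the numeric values are whole numbers. The function name `build_prompt` is made up for illustration.

```python
from typing import Optional

# Camera controls and their allowed directions, as given in the listing above.
CAMERA_MOVES = {
    "zoom": {"in", "out"},
    "pan": {"left", "right"},
    "tilt": {"up", "down"},
    "rotate": {"cw", "ccw"},
}


def build_prompt(text: str, *, framerate: Optional[int] = None,
                 motion: Optional[int] = None, gs: Optional[int] = None,
                 camera: Optional[tuple[str, str]] = None) -> str:
    """Append validated parameters to a prompt; `camera` is a single (move, direction) pair."""
    parts = [text]
    for name, value, low, high in (("framerate", framerate, 8, 24),
                                   ("motion", motion, 0, 4),
                                   ("gs", gs, 8, 24)):
        if value is None:
            continue
        if not low <= value <= high:
            raise ValueError(f"{name} must be between {low} and {high}, got {value}")
        parts.append(f"--{name} {value}")  # assumed syntax: "--name value"
    if camera is not None:
        move, direction = camera
        if direction not in CAMERA_MOVES.get(move, set()):
            raise ValueError(f"unsupported camera control: {move} {direction}")
        parts.append(f"--{move} {direction}")
    return " ".join(parts)


print(build_prompt("Britney waves to the crowd", motion=2, camera=("zoom", "out")))
# Britney waves to the crowd --motion 2 --zoom out
```

Accepting a single `camera` pair, rather than separate zoom, pan, tilt, and rotate arguments, is how the helper enforces the "use one at a time" rule.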